An important concern when running a load-balanced service is how to handle information that must be kept across the multiple requests in a user's session. Ideally, the cluster of servers behind the load balancer should not be session-aware, so that a client can connect to any backend server at any time without affecting the user experience. This is usually achieved with a shared database or an in-memory session store such as Memcached.
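To make the "not session-aware" idea concrete, here is a minimal sketch in which backends keep no session state of their own and read and write everything through one shared store. The plain dictionary inside `SharedSessionStore` is an assumption standing in for an external store such as Memcached; the class and method names are hypothetical.

```python
"""Minimal sketch: stateless backends that keep session data in a shared store.

Assumption: the dict inside SharedSessionStore stands in for an external
session store such as Memcached; a real deployment would use a client library.
"""
from dataclasses import dataclass, field


@dataclass
class SharedSessionStore:
    # Stand-in for an external session database shared by all backends.
    data: dict = field(default_factory=dict)

    def get(self, session_id: str) -> dict:
        return self.data.setdefault(session_id, {})


@dataclass
class Backend:
    name: str
    store: SharedSessionStore

    def add_to_cart(self, session_id: str, item: str) -> list:
        # The backend holds no session state itself; it always goes
        # through the shared store, so any backend can serve any request.
        session = self.store.get(session_id)
        session.setdefault("cart", []).append(item)
        return session["cart"]


store = SharedSessionStore()
a, b = Backend("a", store), Backend("b", store)
a.add_to_cart("s1", "book")
print(b.add_to_cart("s1", "pen"))  # ['book', 'pen']
```

Because backend `b` sees the item added through backend `a`, the load balancer is free to send each request to whichever server it likes.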

Docker Swarm runs a load balancer on every node to handle inbound requests as well as internal requests between nodes. Weighted algorithms account for differences in server capacity; non-weighted algorithms make no such distinction and instead assume that all servers have the same capacity. This speeds up the load balancing process, but it makes no accommodation for servers with different levels of capacity. As a result, non-weighted algorithms cannot optimize server capacity. Review the client's network and practices, looking for opportunities to improve network performance, efficiency and reliability by using the server load balancing platform.
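The difference between weighted and non-weighted algorithms can be sketched with round robin. The server names and weights below are hypothetical, and the weighted version uses a naive expansion; some production balancers use a "smooth" variant that interleaves picks instead of sending bursts to one server.

```python
"""Sketch: non-weighted vs. weighted round robin.

Server names and weights are hypothetical examples.
"""
from itertools import cycle, islice


def round_robin(servers):
    # Non-weighted: every server is treated as having equal capacity.
    return cycle(servers)


def weighted_round_robin(weights):
    # Weighted: a server with weight w appears w times per cycle, so it
    # receives proportionally more requests (naive expansion).
    expanded = [name for name, w in weights.items() for _ in range(w)]
    return cycle(expanded)


servers = ["s1", "s2", "s3"]
print(list(islice(round_robin(servers), 6)))
# ['s1', 's2', 's3', 's1', 's2', 's3']
print(list(islice(weighted_round_robin({"big": 3, "small": 1}), 8)))
# ['big', 'big', 'big', 'small', 'big', 'big', 'big', 'small']
```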

Array’s Server Load Balancer

This Network Engineer will work on leading-edge server load balancing network design and operations. This position will support various clients within Health Solutions. A wide range of load balancing algorithms can be used in HAProxy, and the algorithm is chosen based on our requirements. This method of load balancing distributes transport-level traffic through routing decisions. To make this happen, websites must be able to accommodate a large volume of traffic without overloading servers. Fortunately, server load balancing helps manage heavy traffic on websites.
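One common way to route transport-level traffic is to hash each connection's 5-tuple (source address and port, destination address and port, protocol), so every packet of a flow reaches the same backend. This is a sketch under assumptions: the addresses are hypothetical, and real layer 4 balancers often use consistent hashing or connection tables rather than a plain modulo.

```python
"""Sketch: transport-level (layer 4) distribution by hashing the 5-tuple.

Assumptions: addresses and ports are hypothetical; real L4 balancers often
use consistent hashing or connection tables rather than a plain modulo.
"""
import hashlib


def pick_backend(src_ip, src_port, dst_ip, dst_port, proto, backends):
    # Every packet of one TCP/UDP flow shares the same 5-tuple, so the whole
    # connection goes to one backend without inspecting the payload.
    key = f"{src_ip}:{src_port}-{dst_ip}:{dst_port}/{proto}".encode()
    # sha256 instead of hash(): Python's str hash is randomized per process.
    digest = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return backends[digest % len(backends)]


backends = ["10.0.0.1", "10.0.0.2", "10.0.0.3"]
first = pick_backend("203.0.113.7", 51000, "198.51.100.1", 443, "tcp", backends)
again = pick_backend("203.0.113.7", 51000, "198.51.100.1", 443, "tcp", backends)
assert first == again  # same flow -> same backend
```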

The web front end comprises the graphical user interface of a website or application. Using load balancing requires additional configuration to maintain a stable connection between the client and server. You can also reconfigure the load balancer if the array of servers in the downstream cluster changes. When traffic is distributed across two or more servers and one server fails, the remaining servers can continue to handle requests.

Least Response Time

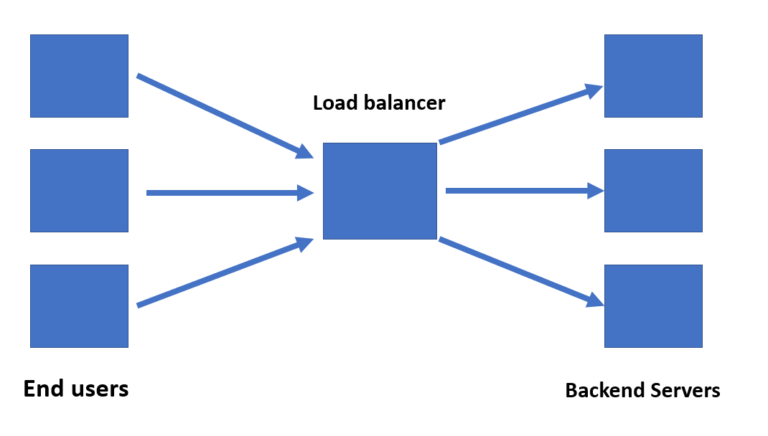

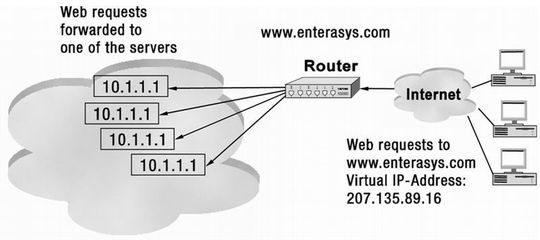

Load balancers should only forward traffic to "healthy" backend servers. In the example illustrated above, the user accesses the load balancer, which forwards the user's request to a backend server, which then responds directly to the user's request. In this scenario, the single point of failure is now the load balancer itself.
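Forwarding only to healthy backends can be sketched as filtering the pool before each pick. Here `is_healthy` is a hypothetical stand-in for a real probe (for example an HTTP request to a status endpoint); it simply reads a dictionary so the example is deterministic.

```python
"""Sketch: forward requests only to backends that pass a health check.

Assumption: `is_healthy` is a hypothetical stand-in for a real probe;
here it reads a dict instead of contacting the server.
"""
from itertools import count

health = {"app1": True, "app2": False, "app3": True}


def is_healthy(server):
    return health[server]


class HealthAwareBalancer:
    def __init__(self, servers):
        self.servers = list(servers)
        self._counter = count()

    def choose(self):
        healthy = [s for s in self.servers if is_healthy(s)]
        if not healthy:
            raise RuntimeError("no healthy backend available")
        # Round robin over the healthy subset only.
        return healthy[next(self._counter) % len(healthy)]


lb = HealthAwareBalancer(["app1", "app2", "app3"])
print([lb.choose() for _ in range(4)])  # ['app1', 'app3', 'app1', 'app3']
```

Note that the balancer itself is still a single point of failure, as the paragraph above points out; health checks only protect against backend failures.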

A slow, faulty network can impact critical business services and lead to a poor end-user experience. For example, Terminix, a global pest control brand, uses Gateway Load Balancer to handle 300% more throughput. Second Spectrum, a company that provides artificial intelligence-driven tracking technology for sports broadcasts, uses AWS Load Balancer Controller to reduce hosting costs by 90%. Code.org, a nonprofit dedicated to expanding access to computer science in schools, uses Application Load Balancer to handle a 400% spike in traffic efficiently during online coding events.

This can prevent server overload caused by the volume of traffic received. This method looks for the server currently serving the least traffic, measured as bandwidth in megabits per second (Mbps). The disadvantage of this algorithm is that it does not consider the load and characteristics of each server; it assumes that every server has the same capabilities and specifications. IP Hash: the client's IP address is used to determine which server receives the request. If you have a configuration where authentication servers are a superset of accounting servers, the preferred server is not used.
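Both algorithms from the paragraph above fit in a few lines. This is a sketch with hypothetical server names and static Mbps figures; a real balancer would measure current bandwidth continuously.

```python
"""Sketch of two algorithms: IP hash and least bandwidth.

Assumptions: server names and Mbps figures are hypothetical; a real balancer
would measure current bandwidth rather than read a static dict.
"""
import hashlib


def ip_hash(client_ip, servers):
    # The client's IP address alone decides the server, so the same client
    # keeps landing on the same server while the pool is unchanged.
    digest = hashlib.sha256(client_ip.encode()).digest()
    return servers[int.from_bytes(digest[:4], "big") % len(servers)]


def least_bandwidth(current_mbps):
    # Pick the server currently serving the least traffic, in Mbps.
    return min(current_mbps, key=current_mbps.get)


servers = ["web1", "web2", "web3"]
assert ip_hash("192.0.2.10", servers) == ip_hash("192.0.2.10", servers)
print(least_bandwidth({"web1": 420.0, "web2": 95.5, "web3": 310.0}))  # web2
```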

By default, load balancing is not enabled on the RADIUS server group. If two RADIUS servers are configured in a server group, only one is used. The other server acts as a standby: if the primary server is declared dead, the secondary server receives all of the load.
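The default primary/standby behavior can be sketched as "use the first live server in the group". The server names and the `dead` set are hypothetical; a real RADIUS client declares a server dead after requests to it go unanswered.

```python
"""Sketch of default primary/standby selection in a server group.

Assumption: server names and the `dead` set are hypothetical; a real RADIUS
client declares a server dead after unanswered requests.
"""


def select_radius_server(group, dead):
    # Without load balancing, the first live server in the group takes all
    # the load; a later one is used only if every earlier one is dead.
    for server in group:
        if server not in dead:
            return server
    raise RuntimeError("all RADIUS servers in the group are dead")


group = ["radius-primary", "radius-secondary"]
print(select_radius_server(group, dead=set()))               # radius-primary
print(select_radius_server(group, dead={"radius-primary"}))  # radius-secondary
```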

Details About RADIUS Server Load Balancing

All public servers that are part of the RADIUS server group are then load balanced. Perform ongoing administration of server load balancing installations and upgrade initiatives, and maintain deployment schedules. We use a primary load balancer in active mode and a secondary load balancer in passive mode. A server load balancer monitors your website and automatically detects and blocks unwanted activity before it causes harm. Least Connections: the load balancer selects the server with the fewest active connections, which is recommended when traffic results in longer sessions. The fail_timeout parameter also determines how long a server is considered failed.
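Least connections and a fail_timeout-style cooldown can be combined in one small model. This is a simplified sketch, not NGINX's implementation: a server that reaches `max_fails` consecutive failures is skipped for `fail_timeout` seconds, and the clock is passed in explicitly so the example is deterministic. The class names are hypothetical.

```python
"""Sketch: least-connections selection with a fail_timeout-style cooldown.

Assumptions: simplified model, not NGINX's implementation. A server that
reaches `max_fails` consecutive failures is skipped for `fail_timeout`
seconds; the clock is passed in so the example is deterministic.
"""
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    active: int = 0          # current open connections
    fails: int = 0           # consecutive failures
    down_until: float = 0.0  # time until which the server is skipped


class LeastConnBalancer:
    def __init__(self, names, max_fails=1, fail_timeout=10.0):
        self.servers = [Server(n) for n in names]
        self.max_fails = max_fails
        self.fail_timeout = fail_timeout

    def acquire(self, now):
        candidates = [s for s in self.servers if s.down_until <= now]
        if not candidates:
            raise RuntimeError("all servers are marked failed")
        # Least connections: fewest open connections wins (ties: first listed).
        server = min(candidates, key=lambda s: s.active)
        server.active += 1
        return server

    def release(self, server, now, ok=True):
        server.active -= 1
        if ok:
            server.fails = 0
            return
        server.fails += 1
        if server.fails >= self.max_fails:
            # Keep the server out of rotation for fail_timeout seconds.
            server.down_until = now + self.fail_timeout
            server.fails = 0


lb = LeastConnBalancer(["a", "b"], max_fails=1, fail_timeout=10.0)
s1 = lb.acquire(now=0.0)           # a (tie, first in list)
s2 = lb.acquire(now=0.0)           # b (a already has one connection)
lb.release(s1, now=1.0, ok=False)  # a fails and is skipped until t=11
print(lb.acquire(now=2.0).name)    # b
print(lb.acquire(now=12.0).name)   # a
```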

Adding new servers during heavy traffic improves application performance, and the new resources are automatically added to the load balancer. To configure NGINX as a load balancer in the http context, you must define a set of backend servers with an upstream block. NGINX is popular web server software that is deployed to improve a server's resource availability and performance. As a load balancer, NGINX typically acts as a single entry point to a distributed web application running on multiple separate servers, distributing incoming requests or network load efficiently among them to improve performance.
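To show what such an upstream block looks like, here is a small Python helper that renders one together with a proxying server block. The upstream name, addresses and listen port are hypothetical; the directives used (`upstream`, `server`, `least_conn`, `max_fails`, `fail_timeout`, `proxy_pass`) are standard NGINX directives, and the output is meant to sit inside the `http` context of nginx.conf.

```python
"""Sketch: render an NGINX upstream block plus a proxying server block.

Assumptions: the upstream name, addresses and listen port are hypothetical.
The generated text belongs inside the http context of nginx.conf.
"""


def render_nginx_lb(name, backends, method=None, max_fails=3, fail_timeout="30s"):
    lines = [f"upstream {name} {{"]
    if method:  # e.g. "least_conn" or "ip_hash"; omitted means round robin
        lines.append(f"    {method};")
    for addr in backends:
        lines.append(
            f"    server {addr} max_fails={max_fails} fail_timeout={fail_timeout};"
        )
    lines.append("}")
    # A server block that forwards every request to the upstream group.
    lines += [
        "server {",
        "    listen 80;",
        "    location / {",
        f"        proxy_pass http://{name};",
        "    }",
        "}",
    ]
    return "\n".join(lines)


print(render_nginx_lb("backend", ["10.0.0.11:8080", "10.0.0.12:8080"], method="least_conn"))
```

Leaving `method` unset produces NGINX's default round-robin behavior, which fits when all backends have similar capacity.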

Static Load Distribution Without Prior Information

Changing which server receives requests from a client in the middle of a shopping session may cause performance problems or outright transaction failure. In such cases, it is essential that all requests from a client are sent to the same server for the duration of the session. To scale cost-effectively to meet these high volumes, modern computing best practice generally requires adding more servers. Load balancing refers to efficiently distributing incoming network traffic across a group of backend servers, also known as a server farm or server pool. Network load balancing also provides network redundancy and failover. If a WAN link suffers an outage, redundancy makes it possible to still access network resources through a secondary link.
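Keeping every request of a session on the same server ("session persistence" or "sticky sessions") can be sketched as a lookup table filled on the first request. The session ID here is an assumption standing in for whatever the balancer keys on in practice, such as a cookie; server names are hypothetical.

```python
"""Sketch: session persistence ("sticky sessions").

Assumption: the session ID stands in for whatever the balancer keys on in
practice (for example a cookie); server names are hypothetical.
"""
from itertools import cycle


class StickyBalancer:
    def __init__(self, servers):
        self._next = cycle(servers)
        self._assigned = {}

    def route(self, session_id):
        # The first request of a session is assigned round robin; every
        # later request in the same session goes to that same server.
        if session_id not in self._assigned:
            self._assigned[session_id] = next(self._next)
        return self._assigned[session_id]


lb = StickyBalancer(["shop1", "shop2"])
print([lb.route(s) for s in ["alice", "bob", "alice", "alice", "bob"]])
# ['shop1', 'shop2', 'shop1', 'shop1', 'shop2']
```

The trade-off is that a session is tied to one server's health; the shared-store approach avoids that at the cost of an extra lookup per request.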

Several implementations of this concept exist, defined by a task division model and by the rules determining the exchange between processors. Especially in large-scale computing clusters, it is not tolerable to execute a parallel algorithm that cannot withstand the failure of a single component. Therefore, fault-tolerant algorithms are being developed that can detect processor outages and recover the computation.
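A very small model of a task division plus recovery after an outage: tasks are dealt to processors in round-robin order, and when one processor fails, its unfinished tasks are redistributed among the survivors. Worker and task labels are hypothetical, and the failure is simulated by naming the failed worker; real systems must also detect the outage (for example with heartbeats) and restore partial results.

```python
"""Sketch: static task division with recovery after a processor outage.

Assumptions: workers and tasks are hypothetical labels, and failure detection
is simulated by passing the failed worker's name.
"""


def divide(tasks, workers):
    # Task division model: deal tasks to workers in round-robin order.
    plan = {w: [] for w in workers}
    for i, task in enumerate(tasks):
        plan[workers[i % len(workers)]].append(task)
    return plan


def recover(plan, failed):
    # Reassign the failed worker's unfinished tasks to the surviving workers.
    orphaned = plan.pop(failed)
    survivors = list(plan)
    for i, task in enumerate(orphaned):
        plan[survivors[i % len(survivors)]].append(task)
    return plan


plan = divide(list(range(6)), ["p0", "p1", "p2"])
print(plan)                 # {'p0': [0, 3], 'p1': [1, 4], 'p2': [2, 5]}
print(recover(plan, "p1"))  # {'p0': [0, 3, 1], 'p2': [2, 5, 4]}
```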

Depending on its capabilities, a server load balancer operates within one of the main categories of load balancing. In today's digital era, effectively managing high-volume traffic on websites and web servers has become crucial. If a website or web application receives too many requests, it simply becomes overloaded. Load balancing can be useful in applications with redundant communication links. For example, an organization might have multiple Internet connections to ensure network access if one of the connections fails. A failover arrangement means that one link is designated for normal use, while the second link is used only if the first link fails.
